Russ Allbery: Review: Finder

| Series: | Finder Chronicles #1 |
|---|---|
| Publisher: | DAW Books |
| Copyright: | 2019 |
| ISBN: | 0-7564-1511-X |
| Format: | Kindle |
| Pages: | 391 |


meta-data. In a previous post, we have already described how one can set meta-data to data that are already loaded, and how one can make use of them. QSoas is already able to figure out some meta-data in the case of electrochemical data, most notably in the case of files acquired by GPES, ECLab or CHI potentiostats. However, only a small number of manufacturers are supported as of now[1], and there are a number of experimental details that the software is never going to be able to figure out for you, such as the pH, the sample, what you were doing... The new version of QSoas provides a means to permanently store meta-data for experimental data files:

QSoas> record-meta pH 7 file.dat

This command uses record-meta to permanently store the information pH = 7 for the file file.dat. Any time QSoas loads the file again, either today or in one year, the meta-data will contain the value 7 for the field pH. Behind the scenes, QSoas creates a single small file, file.dat.qsm, in which the meta-data are stored (in the form of a JSON dictionary).
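The following is a rough illustration of the sidecar idea, not QSoas's actual code. This Python sketch stores one field per call in a `<data file>.qsm` JSON dictionary and reads it back. The helper names `sidecar_path`, `record_meta` and `load_meta` are hypothetical. The sketch also assumes the sidecar is a flat JSON object, whereas the real .qsm layout may hold more than that.

```python
import json
from pathlib import Path


def sidecar_path(data_file: Path) -> Path:
    # file.dat -> file.dat.qsm: the suffix is appended, not substituted
    return data_file.with_name(data_file.name + ".qsm")


def load_meta(data_file: Path) -> dict:
    """Return the stored meta-data, or an empty dict if no sidecar exists."""
    side = sidecar_path(data_file)
    return json.loads(side.read_text()) if side.exists() else {}


def record_meta(data_file: Path, field: str, value) -> None:
    """Store one field, keeping any fields recorded earlier (hypothetical helper)."""
    meta = load_meta(data_file)
    meta[field] = value
    sidecar_path(data_file).write_text(json.dumps(meta, indent=2))
```

Calling `record_meta(Path("file.dat"), "pH", 7)` and later `load_meta(Path("file.dat"))` gives back `{"pH": 7}`. Because the value lives next to the data file rather than in the session, it survives across runs, which is the point of record-meta.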

You can set the same meta-data to many files in one go, using wildcards (see load for more information). For instance, to set the pH=7 meta-data to all the .dat files in the current directory, you can use:

QSoas> record-meta pH 7 *.dat

You can only set one meta-data for each call to record-meta, but you can use it as many times as you like. Finally, you can use the /for-which option to load or browse to select only the files which have the meta-data you need:

QSoas> browse /for-which=$meta.pH<=7

This command browses the files in the current directory, showing only the ones that have a pH meta-data which is 7 or below.
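The selection done by /for-which can be pictured with a small Python sketch that filters data files on their sidecar meta-data. It assumes, as a simplification, that each `<name>.qsm` file is a flat JSON dictionary. The function names `files_for_which` and `ph_at_most_7` are hypothetical. How QSoas itself treats files lacking a pH entry is not stated in the post. In this sketch, such files are simply skipped.

```python
import json
from pathlib import Path
from typing import Callable, Iterator


def files_for_which(directory: Path, predicate: Callable[[dict], bool],
                    pattern: str = "*.dat") -> Iterator[Path]:
    """Yield data files whose sidecar meta-data satisfy predicate."""
    for data_file in sorted(directory.glob(pattern)):
        side = data_file.with_name(data_file.name + ".qsm")
        meta = json.loads(side.read_text()) if side.exists() else {}
        if predicate(meta):
            yield data_file


def ph_at_most_7(meta: dict) -> bool:
    # Files with no recorded pH are excluded in this sketch
    return "pH" in meta and meta["pH"] <= 7
```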

[1] I'm always ready to implement the parsing of other file formats that could be useful for you. If you need parsing of special files, please contact me, sending the given files and the meta-data you'd expect to find in them.

About QSoas

QSoas is a powerful open source data analysis program that focuses on flexibility and powerful fitting capacities. It is released under the GNU General Public License. It is described in Fourmond, Anal. Chem., 2016, 88 (10), pp 5050-5052. Current version is 3.0. You can download its source code there (or clone from the GitHub repository) and compile it yourself, or buy precompiled versions for MacOS and Windows there.

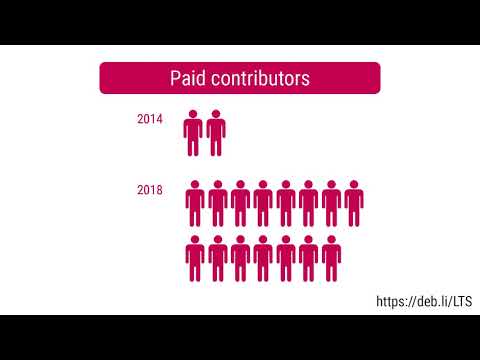

Like each month, have a look at the work funded by Freexian's Debian LTS offering.

Debian project funding

In January, we put aside 2175 EUR to fund Debian projects.

As part of this, Carles Pina i Estany started to work on better no-dsa support for the PTS, which recently resulted in two merge requests which will hopefully be deployed soon.

We're looking forward to receiving more projects from various Debian teams! Learn more about the rationale behind this initiative in this article.

Debian LTS contributors

In January, 13 contributors were paid to work on Debian LTS; their reports are available:


A new release 0.2.3 of the RcppSMC package arrived on CRAN earlier today. Once again it progressed as a very quick pretest-publish within minutes of submission. Thanks, CRAN!

RcppSMC provides Rcpp-based bindings to R for the Sequential Monte Carlo Template Classes (SMCTC) by Adam Johansen described in his JSS article. Sequential Monte Carlo is also referred to as Particle Filter in some contexts.

This release somewhat belatedly merges a branch Leah had been working on and which we all realized is ready. We now have a good snapshot to base new work on, perhaps with the Google Summer of Code 2021.


Courtesy of my CRANberries, there is a diffstat report for this release. More information is on the RcppSMC page. Issues and bugreports should go to the GitHub issue tracker. If you like this or other open-source work I do, you can now sponsor me at GitHub.

Changes in RcppSMC version 0.2.3 (2021-02-10)

- Addition of a GitHub Action CI runner (Dirk)

- Switching to inheritance for the moveset rather than pointers to functions (Leah in #45).

This post by Dirk Eddelbuettel originated on his Thinking inside the box blog. Please report excessive re-aggregation in third-party for-profit settings.

Here is my monthly update covering what I have been doing in the free software world during December 2020 (previous month):


README (#25).

circlator, dvbstreamer, eric, jbbp, knot-resolver, libjs-qunit, mail-expire, osmo-mgw, python-pyramid, pyvows & sayonara.

debian/copyright file to match the copyright notices in the source tree. (#224).py copyright headers. [...]

readelf(1). [...]

minimal instead of basic as a variable name to match the underlying package name. [...]

pprint.pformat in the JSON comparator to serialise the differences from jsondiff. [...]

python-django:

2.2.17-2 Fix compatibility with GNU gettext version 0.21. (#978263)

3.1.4-1 New upstream bugfix release.

redis:

mtools (4.0.26-1) New upstream release.

adminer (4.7.8-2) on behalf of Alexandre Rossi and performed two QA uploads of sendfile (2.1b.20080616-7 and 2.1b.20080616-8) to make the build reproducible (#776938) and to fix a number of other unrelated issues.

Debian LTS

This month I have worked 18 hours on Debian Long Term Support (LTS) and 12 hours on its sister Extended LTS project.

awstats, imagemagick, node-ini, openexr, openssl1.0, p11-kit, pypy, python-py, sqlite3, sympa, etc.

node-ini, an .ini configuration file format parser/serialiser for Node.js, where an application could be exploited by a malicious input file.

Formal sector issues

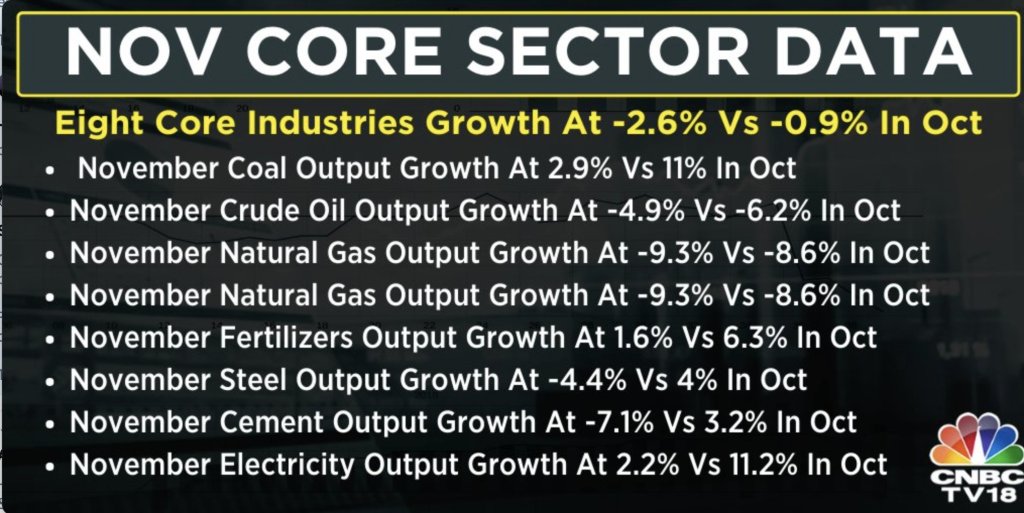

Just today it was published that output from eight formal sectors, which make up the bulk of the Indian economy, was down on a month-to-month basis. This means all those apologists for the Government who said that it was ok that the Govt. didn't give the 20 lakh crore package which was announced. In fact, a businessman from my own city, a certain Prafull Sarda, had asked via RTI what happened to the 20 lakh crore package which was announced. The answers were in all media as well as newspapers, but on the inside pages. You can see one of the articles sharing the details here. No wonder Vivek Kaul also shared his take on the way things will hopefully go for the next quarter, which seems to be a tad optimistic from where we are atm.


Eight Sectors declining in Indian Economy month-on-month CNBC TV 18


load-one.cmds:

load ${1}
apply-formula y2=y**2 /extra-columns=1
flag /flags=processed

When this script is run with the name of a spectrum file as argument, it loads it (${1} is replaced by the first argument, the file name), adds a column y2 containing the square of the y column, and flags it with the processed flag. This is not absolutely necessary, but it makes it much easier to refer to all the spectra once they are processed. Then, to process all the spectra, one just has to run the following commands:

run-for-each load-one.cmds Spectrum-1.dat Spectrum-2.dat Spectrum-3.dat
average flagged:processed
apply-formula y2=(y2-y**2)**0.5
dataset-options /yerrors=y2

The run-for-each command runs the load-one.cmds script for all the spectra (one could also have used Spectrum-*.dat to avoid giving all the file names). Then, the average command averages the values of the columns over all the datasets. To be clear, it finds all the values that have the same X (or very close X values) and averages them, column by column. The result of this command is therefore a dataset with the average of the original \(y\) data as y column and the average of the original \(y^2\) data as y2 column. So now, the only thing left to do is to compute the standard deviation as \(\sigma = \sqrt{\langle y^2\rangle - \langle y\rangle^2}\), which is done by the apply-formula command. The last command, dataset-options, is not absolutely necessary, but it signals to QSoas that the standard error of the y column should be found in the y2 column. This is now available as script method-one.cmds in the git repository.
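The same idea can be checked outside QSoas: average \(y\) and \(y^2\), then take the square root of the difference. This Python sketch is only an illustration, with the hypothetical function name `average_spectra`. Unlike average, which also merges points whose X values are merely very close, the sketch groups points by exactly equal x values. Like the script, it computes the population standard deviation, dividing by n.

```python
from collections import defaultdict
from math import sqrt


def average_spectra(spectra):
    """Average several spectra point by point.

    spectra: iterable of spectra, each a list of (x, y) pairs.
    Returns a sorted list of (x, mean_y, sigma) with
    sigma = sqrt(<y^2> - <y>^2), the formula used in the QSoas script.
    """
    acc = defaultdict(lambda: [0.0, 0.0, 0])  # x -> [sum of y, sum of y^2, count]
    for spectrum in spectra:
        for x, y in spectrum:
            entry = acc[x]
            entry[0] += y
            entry[1] += y * y
            entry[2] += 1
    result = []
    for x in sorted(acc):
        s, s2, n = acc[x]
        mean, mean_sq = s / n, s2 / n
        # max() guards against tiny negative values caused by rounding
        result.append((x, mean, sqrt(max(mean_sq - mean * mean, 0.0))))
    return result
```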

apply-formula, the value $stats.y_stddev corresponds to the standard deviation of the whole y column... Loading the spectra yields just a series of x,y datasets. We can contract them into a single dataset with one x column and several y columns:

load Spectrum-*.dat /flags=spectra
contract flagged:spectra

After these commands, the current dataset contains data in the form of:

lambda1 a1_1 a1_2 a1_3
lambda2 a2_1 a2_2 a2_3
...

in which the ai_1 come from the first file, ai_2 from the second, and so on. We need to use transpose to transform that dataset into:

0 a1_1 a2_1 ...
1 a1_2 a2_2 ...
2 a1_3 a2_3 ...

In this dataset, the absorbance values for a given wavelength are now stored in a single column, with one row per original spectrum. The next step is just to use expand to obtain a series of datasets with the same x column and a single y column (each corresponding to a different wavelength in the original data). The game is now to replace these datasets with something that looks like:

0 a_average
1 a_stddev

For that, one takes advantage of the $stats.y_average and $stats.y_stddev values in apply-formula, together with the i special variable that represents the index of the point:

apply-formula "if i == 0; then y=$stats.y_average; end; if i == 1; then y=$stats.y_stddev; end"
strip-if i>1

Then, all that is left is to apply this to all the datasets created by expand, which can easily be done using run-for-datasets, and then to reverse the splitting using contract and transpose! In summary, we need two files. The first, process-one.cmds, contains the following code:

apply-formula "if i == 0; then y=$stats.y_average; end; if i == 1; then y=$stats.y_stddev; end"
strip-if i>1
flag /flags=processed

The main file, method-two.cmds, looks like this:

load Spectrum-*.dat /flags=spectra
contract flagged:spectra
transpose
expand /flags=tmp
run-for-datasets process-one.cmds flagged:tmp
contract flagged:processed
transpose
dataset-options /yerrors=y2

Note that some of the code above can be greatly simplified using new features present in the upcoming 3.0 version, but that is the topic for another post.

About QSoas

QSoas is a powerful open source data analysis program that focuses on flexibility and powerful fitting capacities. It is released under the GNU General Public License. It is described in Fourmond, Anal. Chem., 2016, 88 (10), pp 5050-5052. Current version is 2.2. You can download its source code and compile it yourself or buy precompiled versions for MacOS and Windows there.

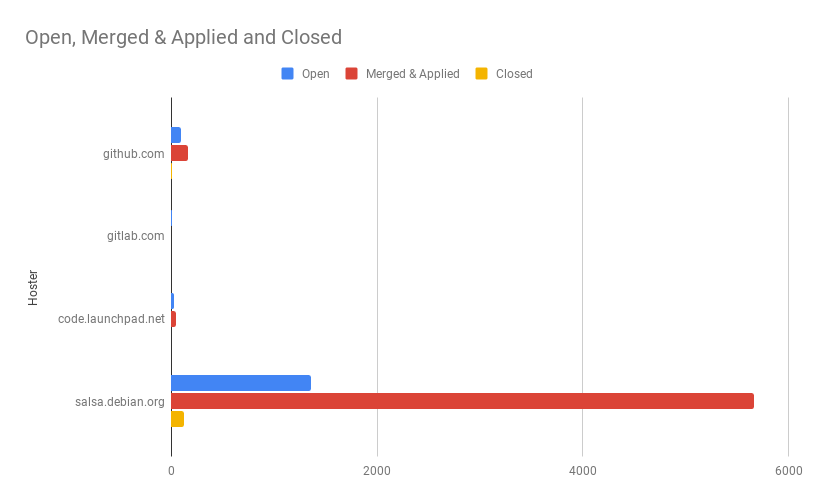

The Debian Janitor is an automated system that commits fixes for (minor) issues in Debian packages that can be fixed by software. It gradually started proposing merges in early December. The first set of changes sent out ran lintian-brush on sid packages maintained in Git. This post is part of a series about the progress of the Janitor.

The Janitor knows how to talk to different hosting platforms. For each hosting platform, it needs to support the platform-specific API for creating and managing merge proposals. For each hoster it also needs to have credentials.

At the moment, it supports the GitHub API, Launchpad API and GitLab API. Both GitHub and Launchpad have only a single instance; the GitLab instances it supports are gitlab.com and salsa.debian.org.
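Picking an API per hosting platform boils down to mapping the host in a package's Vcs-Git URL to one of the supported APIs. The Python sketch below shows that lookup. The `HOSTER_APIS` table and the `hoster_api` function are hypothetical, and the Janitor's real configuration is not shown in the post. Credentials and the actual merge-proposal calls are left out.

```python
from typing import Optional
from urllib.parse import urlparse

# Hypothetical table; the Janitor's real configuration is not shown in the post.
HOSTER_APIS = {
    "github.com": "github",
    "git.launchpad.net": "launchpad",
    "gitlab.com": "gitlab",
    "salsa.debian.org": "gitlab",  # salsa is a GitLab instance
}


def hoster_api(vcs_git: str) -> Optional[str]:
    """Return which merge-proposal API to use for a Vcs-Git value, or None."""
    # Vcs-Git may carry extra fields such as " -b branch" or " [subdir]"
    url = vcs_git.split()[0]
    return HOSTER_APIS.get(urlparse(url).hostname or "")
```

Anything that maps to `None` corresponds to the "many other hosts" case. Those are typically personal servers with no API for creating pull requests.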

This provides coverage for the vast majority of Debian packages that can be accessed using Git. More than 75% of all packages are available on salsa, although in some cases the Vcs-Git header has not yet been updated.

Of the other 25%, the majority either do not declare where they are hosted using a Vcs-* header (10.5%), or have not yet migrated from alioth to another hosting platform (9.7%). A further 2.3% are hosted somewhere on GitHub (2%), Launchpad (0.18%) or GitLab.com (0.15%), in many cases in the same repository as the upstream code.

The remaining 1.6% are hosted on many other hosts, primarily people's personal servers (which usually don't have an API for creating pull requests).

| Hoster | Open | Merged & Applied | Closed |
|---|---:|---:|---:|
| github.com | 92 | 168 | 5 |
| gitlab.com | 12 | 3 | 0 |
| code.launchpad.net | 24 | 51 | 1 |
| salsa.debian.org | 1,360 | 5,657 | 126 |

In this table, Open means that the pull request has been created but likely nobody has looked at it yet. Merged means that the pull request has been marked as merged on the hoster, and Applied means that the changes have ended up in the packaging branch but via a different route (e.g. cherry-picked or manually applied). Closed means that the pull request was closed without the changes being incorporated.

Note that this excludes ~5,600 direct pushes, all of which were to salsa-hosted repositories.
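A quick Python calculation can total the columns of the table and compute, per hoster, the share of resolved proposals that landed. "Resolved" is an assumption of this sketch, not a metric from the post: it means merged/applied plus closed, leaving open proposals aside. The counts are copied from the table, and the function names are hypothetical.

```python
# Counts copied from the table: (open, merged & applied, closed)
PROPOSALS = {
    "github.com": (92, 168, 5),
    "gitlab.com": (12, 3, 0),
    "code.launchpad.net": (24, 51, 1),
    "salsa.debian.org": (1360, 5657, 126),
}


def totals(table):
    """Column sums across all hosters: (open, merged & applied, closed)."""
    return tuple(sum(row[i] for row in table.values()) for i in range(3))


def merged_share_of_resolved(table):
    """Fraction of resolved proposals (merged/applied or closed) that were merged."""
    return {host: merged / (merged + closed)
            for host, (_open, merged, closed) in table.items()}
```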

See also:


For more information about the Janitor's lintian-fixes efforts, see the landing page.

This is a follow-up to Russell's blog post, seen here: https://etbe.coker.com.au/2020/10/13/first-try-gnocchi-statsd/. There are a bunch of things he wrote which I unfortunately must say are inaccurate, and sometimes even completely wrong. It is my point of view that none of the reported bugs are helpful for anyone who understands Gnocchi and how to set it up. It was, however, a terrible experience that Russell had, and I do understand why (and why it's not his fault). I'm very much open to suggestions on how to fix this at the packaging level, though some things aren't, IMO, fixable. Here are the details.

1/ The daemon startups

First of all, the most surprising thing is when Russell claimed that there are no startup scripts for the Gnocchi daemons. In fact, they all come with both systemd and sysv-rc support:

# ls /lib/systemd/system/gnocchi-api.service


# it depends on a local MySQL database
apt -y install mariadb-server mariadb-client
# install the basic packages for gnocchi
apt -y install gnocchi-common python3-gnocchiclient gnocchi-statsd uuid

In the Debconf prompts I told it to set up a database and not to manage keystone_authtoken with debconf (because I'm not doing a full OpenStack installation). This gave a non-working configuration, as it didn't configure the MySQL database for the [indexer] section, and the sqlite database that was configured didn't work for unknown reasons. I filed Debian bug #971996 about this [1]. To get this working you need to edit /etc/gnocchi/gnocchi.conf and change the url line in the [indexer] section to something like the following (where the password is taken from the [database] section).

url = mysql+pymysql://gnocchi-common:PASS@localhost:3306/gnocchidb

To get the statsd interface going you have to install the gnocchi-statsd package and edit /etc/gnocchi/gnocchi.conf to put a UUID in the resource_id field (the Debian package uuid is good for this). I filed Debian bug #972092 requesting that the UUID be set by default on install [2]. Here's an official page about how to operate Gnocchi [3]. The main thing I got from this was that the following commands need to be run from the command line (I ran them as root in a VM for test purposes, but would do so with minimum privileges for a real deployment).
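The two manual edits to gnocchi.conf could be scripted. The Python sketch below sets the [indexer] url in the shape shown above and fills an empty [statsd] resource_id with a fresh UUID. The helper name `fix_gnocchi_conf` is hypothetical. The password is passed in explicitly, although in the post it comes from the [database] section. Note that configparser drops comments when it writes a file, so keep a backup of a real /etc/gnocchi/gnocchi.conf or make the edits by hand.

```python
import configparser
import io
import uuid


def fix_gnocchi_conf(text: str, db_password: str) -> str:
    """Hypothetical helper applying the two manual edits described above."""
    cfg = configparser.ConfigParser(interpolation=None)
    cfg.read_string(text)
    for section in ("indexer", "statsd"):
        if not cfg.has_section(section):
            cfg.add_section(section)
    # URL shape copied from the post; user, host and database name may differ locally
    cfg.set("indexer", "url",
            f"mysql+pymysql://gnocchi-common:{db_password}@localhost:3306/gnocchidb")
    # Only invent a resource_id when none is set yet
    if not cfg.get("statsd", "resource_id", fallback="").strip():
        cfg.set("statsd", "resource_id", str(uuid.uuid4()))
    out = io.StringIO()
    cfg.write(out)
    return out.getvalue()
```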

gnocchi-api
gnocchi-metricd

To communicate with Gnocchi you need the gnocchi-api program running, which uses the uwsgi program to provide the web interface by default. It seems that this was written for a version of uwsgi different from the one in Buster. I filed Debian bug #972087 with a patch to make it work with uwsgi [4]. Note that I didn't get to the stage of an end-to-end test; I just got it to basically run without error. After getting gnocchi-api running (in a terminal, not as a daemon, as Debian doesn't seem to have a service file for it), I ran the client program gnocchi and then gave it the status command, which failed (presumably due to the metrics daemon not running) but at least indicated that the client and the API could communicate. Then I ran gnocchi-metricd and got the following error:

2020-10-12 14:59:30,491 [9037] ERROR gnocchi.cli.metricd: Unexpected error during processing job
Traceback (most recent call last):
  File "/usr/lib/python3/dist-packages/gnocchi/cli/metricd.py", line 87, in run
    self._run_job()
  File "/usr/lib/python3/dist-packages/gnocchi/cli/metricd.py", line 248, in _run_job
    self.coord.update_capabilities(self.GROUP_ID, self.store.statistics)
  File "/usr/lib/python3/dist-packages/tooz/coordination.py", line 592, in update_capabilities
    raise tooz.NotImplemented
tooz.NotImplemented

At this stage I've had enough of Gnocchi. I'll give the Etsy Statsd a go next.

Update

Thomas has responded to this post [5]. At this stage I'm not really interested in giving Gnocchi another go. There's still the issue of the indexer database, which should somehow be different from the main database, and sqlite (the config file default) doesn't work.

I expect that if I were to persist with Gnocchi I would encounter more poorly described error messages from the code, which either don't have Google hits when I search for them or have Google hits to unanswered questions from 5+ years ago.

The Gnocchi systemd config files are in different packages from the programs; this confused me, and I thought that there weren't any systemd service files. I had expected that installing a package with a daemon binary would also install the matching systemd unit file.

The cluster features of Gnocchi are probably really good if you need that sort of thing. But if you have a small instance (e.g. a single VM server) then it's not needed. Also, one of the original design ideas of the Etsy Statsd was that UDP was used because data could just be dropped if there was a problem. I think for many situations the same concept could apply to the entire stats service.

If the other statsd programs don't do what I need then I may give Gnocchi another go.

Climbing in Guarda


From: headers. Instead, try out my small new program which can make your Mailman transparent, so that DKIM signatures survive.

Background and narrative

DKIM

NB: This explanation is going to be somewhat simplified. I am going to gloss over some details and make some slightly approximate statements.

DKIM is a new anti-spoofing mechanism for Internet email, intended to help fight spam. DKIM, paired with the DMARC policy system, has been remarkably successful at stemming the flood of joe-job spams. As usually deployed, DKIM works like this:

When a message is originally sent, the author's MUA sends it to the MTA for their From: domain for outward delivery. The From: domain mailserver calculates a cryptographic signature of the message, and puts the signature in the headers of the message.

Obviously not the whole message can be signed, since at the very least additional headers need to be added in transit, and sometimes headers need to be modified too. The signing MTA gets to decide what parts of the message are covered by the signature: they nominate the header fields that are covered by the signature, and specify how to handle the body.

A recipient MTA looks up the public key for the From: domain in the DNS, and checks the signature. If the signature doesn't match, depending on policy (originator's policy, in the DNS, and recipient's policy of course), typically the message will be treated as spam.

The originating site has a lot of control over what happens in practice. They get to publish a formal (DMARC) policy in the DNS which advises recipients what they should do with mails claiming to be from their site. As mentioned, they can say which headers are covered by the signature - including the ability to sign the absence of a particular header - so they can control which headers downstreams can get away with adding or modifying. And they can set a normalisation policy, which controls how precisely the message must match the one that they sent.

Mailman

Mailman is, of course, the extremely popular mailing list manager. There are a lot of things to like about it. I choose to run it myself not just because it's popular but also because it provides a relatively competent web UI and a relatively competent email (un)subscription interface, decent bounce handling, and a pretty good set of moderation and posting access controls.

The Xen Project mailing lists also run on mailman. Recently we had some difficulties with messages sent by Citrix staff (including myself), to Xen mailing lists, being treated as spam. Recipient mail systems were saying the DKIM signatures were invalid.

This was in fact true. Citrix has chosen a fairly strict DKIM policy; in particular, they have chosen "simple" normalisation - meaning that signed message headers must precisely match in syntax as well as in a semantic sense. Examining the failing-DKIM messages showed that this was definitely a factor.
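The post does not list the exact edits Mailman made. With that caveat, the following Python sketch only illustrates why re-serialising a header breaks "simple" header canonicalisation but not "relaxed" canonicalisation, as both are defined in RFC 6376, section 3.4. It covers header fields only. Body canonicalisation, hashing and signing are left out, and the Subject: example is made up.

```python
import re


def canon_simple(field: str) -> str:
    # "simple": the header field is used exactly as it appears
    return field


def canon_relaxed(field: str) -> str:
    # "relaxed" header canonicalisation (RFC 6376, section 3.4.2)
    name, _, value = field.partition(":")
    name = name.rstrip(" \t").lower()             # lowercase name, no WSP before colon
    value = re.sub(r"\r\n(?=[ \t])", "", value)   # unfold continuation lines
    value = re.sub(r"[ \t]+", " ", value)         # collapse whitespace runs
    return f"{name}:{value.strip(' ')}"           # no WSP after colon or at the end


sent = "Subject: [Xen-devel] PATCH v2"
relayed = "subject:  [Xen-devel]\r\n PATCH v2"  # same header, re-wrapped in transit
```

Here `canon_relaxed` maps both variants to the same string, so a "relaxed" signature would still verify. Under `canon_simple` the two differ, which is why a strict "simple" policy like the one described fails as soon as a list server reformats a signed header.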

Applying my Opinions about email

My Bayesian priors tend to suggest that a mail problem involving corporate email is the fault of the corporate email. However in this case that doesn't seem true to me.

My starting point is that I think mail systems should not modify messages unnecessarily. None of the DKIM-breaking modifications made by Mailman seemed necessary to me. I have on previous occasions gone to corporate IT and requested quite firmly that things I felt were broken should be changed. But it seemed wrong to go to corporate IT and ask them to change their published DKIM/DMARC policy to accommodate a behaviour in Mailman which I didn't agree with myself. I felt that instead I should (with my Xen Project hat on) put my own house in order.

Getting Mailman not to modify messages

So, I needed our Mailman to stop modifying the headers. I needed it to not even reformat them. A brief look at the source code to Mailman showed that this was not going to be so easy. Mailman has a lot of features whose very purpose is to modify messages.

Personally, as I say, I don't much like these features. I think the subject line tags, CC list manipulations, and so on, are a nuisance and not really Proper. But they are definitely part of why Mailman has become so popular and I can definitely see why the Mailman authors have done things this way. But these features mean Mailman has to disassemble incoming messages, and then reassemble them again on output. It is very difficult to do that and still faithfully reassemble the original headers byte-for-byte in the case where nothing actually wanted to modify them. There are existing bug reports[1] [2] [3] [4]; I can see why they are still open.

Rejected approach: From:-mangling

This situation is hardly unique to the Xen lists. Many others have struggled with it. The best approach anyone seems to have come up with so far is to turn on a new Mailman feature which rewrites the From: header of the messages that go through it, to contain the list's domain name instead of the originator's.

I think this is really pretty nasty. It breaks normal use of email, such as reply-to-author. It is having Mailman do additional mangling of the message in order to solve the problems caused by other undesirable manglings!

Solution!

As you can see, I asked myself: I want Mailman not to modify messages at all; how can I get it to do that? Given the existing structure of Mailman - with a lot of message-modifying functionality - that would really mean adding a bypass mode. It would have to spot, presumably depending on config settings, that messages were not to be edited; and then, it would avoid disassembling and reassembling the message at all, and bypass the message modification stages. The message would still have to be parsed of course - it's just that the copy sent out ought to be pretty much the incoming message.

When I put it to myself like that I had a thought: couldn't I implement this outside Mailman? What if I took a copy of every incoming message, and then post-processed Mailman's output to restore the original?

It turns out that this is quite easy and works rather well!

outflank-mailman

outflank-mailman is a 233-line script, plus documentation, installation instructions, etc.

It is designed to run from your MTA, on all messages going into, and coming from, Mailman. On input, it saves a copy of the message in a sqlite database, and leaves a note in a new Outflank-Mailman-Id header. On output, it does some checks, finds the original message, and then combines the original incoming message with carefully-selected headers from the version that Mailman decided should be sent.
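The input/output pairing can be pictured with a short Python sketch. This is not the outflank-mailman script itself. The table layout, the `LIST_HEADERS` selection and the function names are assumptions, header values are assumed to be ASCII, and lines are assumed to be LF-terminated. Only the Sender: compromise is taken from the post.

```python
import sqlite3
import uuid
from email import message_from_bytes
from email.policy import compat32

ID_HEADER = "Outflank-Mailman-Id"
# Hypothetical selection of headers to take from Mailman's version
LIST_HEADERS = ["List-Id", "List-Unsubscribe", "List-Post", "List-Help"]


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    db = sqlite3.connect(path)
    db.execute("CREATE TABLE IF NOT EXISTS msgs (id TEXT PRIMARY KEY, raw BLOB)")
    return db


def on_input(db: sqlite3.Connection, raw: bytes) -> bytes:
    """Store the untouched message; hand Mailman a copy tagged with an id."""
    msg_id = uuid.uuid4().hex
    with db:
        db.execute("INSERT INTO msgs (id, raw) VALUES (?, ?)", (msg_id, raw))
    return f"{ID_HEADER}: {msg_id}\n".encode() + raw


def on_output(db: sqlite3.Connection, mailman_raw: bytes) -> bytes:
    """Rebuild the outgoing message: original bytes plus selected Mailman headers."""
    out = message_from_bytes(mailman_raw, policy=compat32)
    row = db.execute("SELECT raw FROM msgs WHERE id = ?", (out[ID_HEADER],)).fetchone()
    if row is None:
        return mailman_raw  # unknown message: pass Mailman's version through
    original = bytes(row[0])
    wanted = list(LIST_HEADERS)
    # Compromise from the post: take Mailman's Sender: only if the original had none
    if message_from_bytes(original, policy=compat32)["Sender"] is None:
        wanted.append("Sender")
    extra = "".join(f"{name}: {out[name]}\n" for name in wanted if out[name] is not None)
    # Prepending header lines leaves the signed original bytes untouched
    return extra.encode() + original
```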

This was deployed for the Xen Project lists on Tuesday morning and it seems to be working well so far.

If you administer Mailman lists, and fancy some new software to address this problem, please do try it out.

Matters arising - Mail filtering, DKIM

Overall I think DKIM is a helpful contribution to the fight against spam (unlike SPF, which is fundamentally misdirected and also broken). Spam is an extremely serious problem; most receiving mail servers experience more attempts to deliver spam than real mail, by orders of magnitude. But DKIM is not without downsides.

Inherent in the design of anything like DKIM is that arbitrary modification of messages by list servers is no longer possible. In principle it might be possible to design a system which tolerated modifications reasonable for mailing lists but it would be quite complicated and have to somehow not tolerate similar modifications in other contexts.

So DKIM means that lists can no longer add those unsubscribe footers to mailing list messages. The "new way" (RFC2369, July 1998) to do this is with the List-Unsubscribe header. Hopefully a good MUA will be able to deal with unsubscription semiautomatically, and I think by now an adequate MUA should at least display these headers by default.

Sender:

There are implications for recipient-side filtering too. The "traditional" correct way to spot mailing list mail was to look for Resent-To:, which can be added without breaking DKIM; the "new" (RFC2919, March 2001) correct way is List-Id:, likewise fine. But during the initial deployment of outflank-mailman I discovered that many subscribers were detecting that a message was list traffic by looking at the Sender: header. I'm told that some mail systems (apparently Microsoft's included) make it inconvenient to filter on List-Id.
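On the recipient side, filtering on List-Id instead of Sender: needs little more than extracting the identifier between the angle brackets, as RFC 2919 defines it. The Python sketch below does that with the standard `email` package. The function names and the example list address are hypothetical. Comparing identifiers case-insensitively is a choice made in this sketch.

```python
import re
from typing import Optional


def list_id(msg) -> Optional[str]:
    """Return the identifier inside the angle brackets of List-Id (RFC 2919), or None."""
    value = msg.get("List-Id")
    if value is None:
        return None
    match = re.search(r"<([^<>]+)>", value)
    # Lowercased so that comparisons are case-insensitive in this sketch
    return match.group(1).strip().lower() if match else None


def is_list_traffic(msg, wanted: str) -> bool:
    # Keyed on List-Id, so it keeps working when the list leaves Sender: alone
    return list_id(msg) == wanted.lower()
```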

Really, I think a mailing list ought not to be modifying Sender:. Given Sender:'s original definition and semantics, there might well be reasonable reasons for a mailing list posting to have different From: and Sender: headers, and then the original Sender: ought not to be lost. And a mailing list's operation does not fit well into the original definition of Sender:. I suspect that list software likes to put in Sender mostly for historical reasons; notably, a long time ago it was not uncommon for broken mail systems to send bounces to the Sender: header rather than the envelope sender (SMTP MAIL FROM).

DKIM makes this more of a problem. Unfortunately the DKIM specifications are vague about what headers one should sign, but they pretty much definitely include Sender: if it is present, and some materials encourage signing the absence of Sender:. The latter is Exim's default configuration when DKIM-signing is enabled.

Frankly, there seems little excuse for systems not to readily support and encourage filtering on List-Id, 20 years later, but I don't want to make life hard for my users. For now we are running a compromise configuration: if there wasn't a Sender: in the original, take Mailman's added one. This will result in (i) misfiltering for some messages whose poster put in a Sender:, and (ii) DKIM failures for messages whose originating system signed the absence of a Sender:. I'm going to mine the db for some stats after it's been deployed for a week or so, to see which of these problems is worst and decide what to do about it.

Mail routing

For DKIM to work, messages being sent From: a particular mail domain must go through a system trusted by that domain, so they can be signed.

Most users tend to do this anyway: their mail provider gives them an IMAP server and an authenticated SMTP submission server, and they configure those details in their MUA. The MUA has a notion of "accounts" and according to the user's selection for an outgoing message, connects to the authenticated submission service (usually using TLS over the global internet).

Trad unix systems where messages are sent using the local sendmail or localhost SMTP submission (perhaps by automated systems, or perhaps by human users) are fine too. The smarthost can do the DKIM signing.

But this solution is awkward for a user of a trad MUA in what I'll call "alias account" setups: where a user has an address at a mail domain belonging to different people to the system on which they run their MUA (perhaps even several such aliases for different hats). Traditionally this worked by the mail domain forwarding the incoming mail, and the user simply self-declaring their identity at the alias domain. Without DKIM there is nothing stopping anyone self-declaring their own From: line.

If DKIM is to be enabled for such a user (preventing people forging mail as that user), the user will have to somehow arrange that their trad unix MUA's outbound mail stream goes via their mail alias provider. For a single-user sending unix system this can be done with tolerably complex configuration in an MTA like Exim. For shared systems this gets more awkward and might require some hairy shell scripting etc.
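The routing requirement amounts to choosing the outbound relay from the sender's domain, so that mail sent as an alias identity leaves via (and is DKIM-signed by) that alias domain's provider. A hypothetical sketch in shell; the domains, host names and the function name smarthost_for are all invented, and a real setup would express the same mapping in the MTA configuration rather than in a script:

```bash
#!/usr/bin/env bash
# Hypothetical sketch: map the sender address to the submission server that
# is trusted (and DKIM-signs) for that domain. All names here are invented.
smarthost_for() {
    local addr="$1" domain
    addr="${addr##*<}"     # "Name <user@dom>" -> "user@dom>"
    addr="${addr%%>*}"     # "user@dom>"       -> "user@dom"
    domain="${addr##*@}"
    domain="$(printf '%s' "$domain" | tr '[:upper:]' '[:lower:]')"
    case "$domain" in
        alias.example.org) echo "submission.alias.example.org:587" ;;
        hats.example.net)  echo "smtp.hats.example.net:587" ;;
        *)                 echo "localhost:25" ;;   # default: local smarthost
    esac
}
```

MTAs normally key this decision on the envelope sender rather than the From: header; for a user's own outgoing mail the two usually coincide. Postfix, for instance, has sender_dependent_relayhost_maps for exactly this kind of table.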

edited 2020-10-01 21:22 and 21:35 and -02 10:50 +0100 to fix typos and 21:28 to linkify "my small program" in the tl;dr

gh cli tool, and this is all over HN, it might make sense to write a note about my clumsy aliases and shell functions I cobbled together in the past month. Background story is that my dayjob moved to GitHub coming from Bitbucket. From my point of view the WebUI for Bitbucket is mediocre, but the one at GitHub is just awful and painful to use, especially for PR processing. So I longed for the terminal and ended up with gh and wtfutil as a dashboard.

The setup we have is painful on its own, with several orgs and repos which are more like monorepos covering several corners of infrastructure, and some which are very focused on a single component. All workflows are anti GitHub workflows, so you must have permission on the repo, create a branch in that repo as a feature branch, and open a PR for the merge back into master.

gh functions and aliases

# setup a token with perms to everything, dealing with SAML is a PITA
export GITHUB_TOKEN="c0ffee4711"
# I use a light theme on my terminal, so adjust the gh theme
export GLAMOUR_STYLE="light"

# simple aliases to poke at a PR
alias gha="gh pr review --approve"
alias ghv="gh pr view"
alias ghd="gh pr diff"

### github support functions, most invoked with a PR ID as $1

# primary function to review PRs
function ghs {
    gh pr view "${1}"
    gh pr checks "${1}"
    gh pr diff "${1}"
}

# very custom PR create function relying on ORG and TEAM settings hard coded
# main idea is to create the PR with my team directly assigned as reviewer
function ghc {
    if git status | grep -q 'Untracked'; then
        echo "ERROR: untracked files in branch"
        git status
        return 1
    fi
    git push --set-upstream origin HEAD
    gh pr create -f -r "$(git remote -v | grep push | grep -oE 'myorg-[a-z]+')/myteam"
}

# merge a PR and update master if we're not in a different branch
function ghm {
    gh pr merge -d -r "${1}"
    if [[ "$(git rev-parse --abbrev-ref HEAD)" == "master" ]]; then
        git pull
    fi
}

# get an overview over the files changed in a PR
function ghf {
    gh pr diff "${1}" | diffstat -l
}

# generate a link to a commit in the WebUI to pass on to someone else
# input is a git commit hash
function ghlink {
    local repo="$(git remote -v | grep -E "github.+push" | cut -d':' -f 2 | cut -d'.' -f 1)"
    echo "https://github.com/${repo}/commit/${1}"
}

github_admin:
  apiKey: "c0ffee4711"
  baseURL: ""
  customQueries:
    othersPRs:
      title: "Pull Requests"
      filter: "is:open is:pr -author:hoexter -label:dependencies"
  enabled: true
  enableStatus: true
  showOpenReviewRequests: false
  showStats: false
  position:
    top: 0
    left: 0
    height: 3
    width: 1
  refreshInterval: 30
  repositories:
    - "myorg/admin"
  uploadURL: ""
  username: "hoexter"
  type: github

-label:dependencies is used here to filter out dependabot PRs in the dashboard.

Workflow

Look at a PR with ghv $ID, if it's ok ACK it with gha $ID.

Create a PR from a feature branch with ghc and later on merge it with ghm $ID.

The $ID is retrieved from looking at my wtfutil based dashboard.
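Both ghlink and ghc depend on parsing the output of git remote -v. The offline sketch below runs the same grep/cut pipelines on a hypothetical remote line (the org and repo name myorg-infra/admin are invented) so you can see what each step yields:

```bash
#!/usr/bin/env bash
# Offline illustration of the remote-parsing pipelines used by ghlink and ghc.
# The remote line is hypothetical; in real use it comes from `git remote -v`.
remote_line=$'origin\tgit@github.com:myorg-infra/admin.git (push)'

# ghlink: keep the text after ':' and drop everything from the first '.'
repo="$(printf '%s\n' "$remote_line" | grep -E "github.+push" | cut -d':' -f 2 | cut -d'.' -f 1)"
echo "https://github.com/${repo}/commit/c0ffee"

# ghc: pull out the organisation name that prefixes the team reviewer
org="$(printf '%s\n' "$remote_line" | grep push | grep -oE 'myorg-[a-z]+')"
echo "${org}/myteam"
```

This prints https://github.com/myorg-infra/admin/commit/c0ffee and myorg-infra/myteam. Note that the ghlink pipeline assumes an SSH-style remote (git@github.com:org/repo.git) and a repository name without dots; an HTTPS remote or a name like my.repo would produce a wrong link.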

Security Considerations

The world is full of bad jokes. For the WebUI access I've the full array of pain with SAML auth, which expires too often, and 2nd factor verification for my account backed by a Yubikey. But to work with the CLI you basically need an API token with full access, everything else drives you insane. So I gave in and generated exactly that. End result is that I now have an API token - which is basically a password - which has full power, and is stored in config files and environment variables. So the security features created around the login are all void now. Was that the aim of it after all?

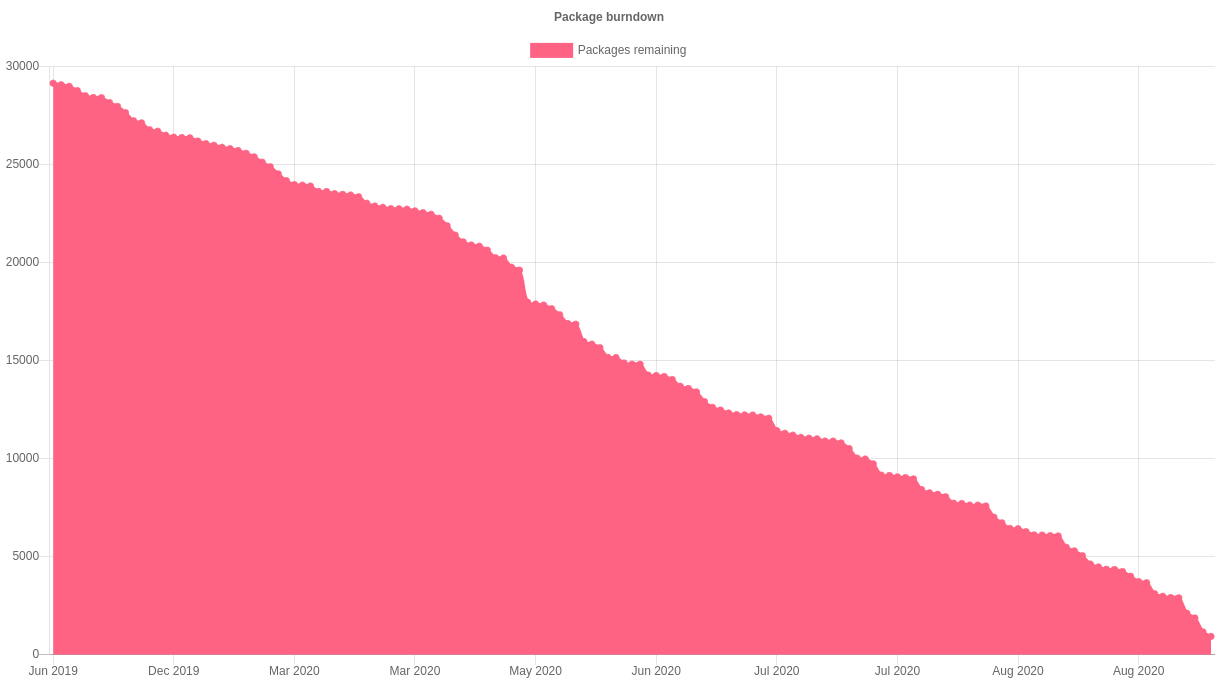

The Debian Janitor is an automated system that commits fixes for (minor) issues in Debian packages that can be fixed by software. It gradually started proposing merges in early December. The first set of changes sent out ran lintian-brush on sid packages maintained in Git. This post is part of a series about the progress of the Janitor.

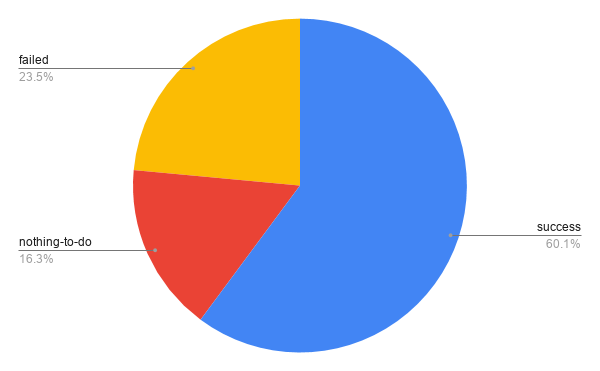

On 12 July 2019, the Janitor started fixing lintian issues in packages in the Debian archive. Now, a year and a half later, it has processed every one of the almost 28,000 packages at least once.

As discussed two weeks ago, this has resulted in roughly 65,000 total changes. These 65,000 changes were made to a total of almost 17,000 packages. Of the remaining packages, lintian-brush could not make any improvements for about 4,500. The rest (about 6,500) failed to be processed for one of many reasons: for example, they have not yet been migrated off alioth, use uncommon formatting that can't be preserved, or failed to build for one reason or another.
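The three buckets should account for the whole archive. A quick shell cross-check using the rounded figures quoted above (the variable names are just labels for this sketch):

```bash
#!/usr/bin/env bash
# Cross-check of the approximate figures quoted above.
changed=17000    # packages that received at least one change ("almost 17,000")
unchanged=4500   # packages lintian-brush could not improve
failed=6500      # packages that failed to be processed
changes=65000    # total changes made

total=$((changed + unchanged + failed))
echo "packages accounted for: ${total}"
# Shell arithmetic is integer-only, so use awk for the per-package average.
avg="$(awk -v c="$changes" -v p="$changed" 'BEGIN { printf "%.1f", c / p }')"
echo "average changes per changed package: ${avg}"
```

The buckets add up to 28,000, matching the "almost 28,000 packages" in the archive, and the changed packages received on average roughly 3.8 changes each. Since the inputs are rounded, both numbers are approximate.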


Now that the entire archive has been processed, packages are prioritized based on the likelihood of a change being made to them successfully.
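The post does not describe how the Janitor estimates this likelihood. As a purely hypothetical illustration of the idea, a work queue could be ordered by each package's past success ratio; the input format, the neutral 0.5 guess for untried packages and the function name prioritize are all invented for this sketch and are not the Janitor's actual scoring:

```bash
#!/usr/bin/env bash
# Hypothetical illustration only: order a queue by an estimated chance of
# success. Input lines are "package successes attempts"; the estimate used
# here is successes/attempts, with untried packages given a neutral 0.5.
prioritize() {
    awk '{
        p = ($3 > 0) ? $2 / $3 : 0.5   # untried packages get a neutral guess
        printf "%.3f %s\n", p, $1
    }' "$@" | sort -k1,1 -rn | awk '{ print $2 }'
}
```

For example, with lines "foo 9 10", "bar 1 10" and "baz 0 0" the queue comes out as foo, baz, bar.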

Over the course of its existence, the Janitor has slowly gained support for a wider variety of packaging methods. For example, it can now edit the templates for some of the generated control files. Many of the packages that the janitor was unable to propose changes for the first time around are expected to be correctly handled when they are reprocessed.

If you're a Debian developer, you can find the list of improvements made by the janitor in your packages by going to https://janitor.debian.net/m/.


For more information about the Janitor's lintian-fixes efforts, see the landing page.


A new release 0.2.2 of the RcppSMC package arrived on CRAN earlier today (and once again as a very quick pretest-publish within minutes of submission).

RcppSMC provides Rcpp-based bindings to R for the Sequential Monte Carlo Template Classes (SMCTC) by Adam Johansen described in his JSS article. Sequential Monte Carlo is also referred to as Particle Filter in some contexts.

This release contains two fixes from a while back that had not yet been released, a CRAN-requested update, plus a few more minor polishes to make it pass R CMD check --as-cran as nicely as usual.

Courtesy of CRANberries, there is a diffstat report for this release. More information is on the RcppSMC page. Issues and bugreports should go to the GitHub issue tracker. If you like this or other open-source work I do, you can now sponsor me at GitHub. For the first year, GitHub will match your contributions.

Changes in RcppSMC version 0.2.2 (2020-08-30)

This post by Dirk Eddelbuettel originated on his Thinking inside the box blog. Please report excessive re-aggregation in third-party for-profit settings.

The Debian Janitor is an automated system that commits fixes for (minor) issues in Debian packages that can be fixed by software. It gradually started proposing merges in early December. The first set of changes sent out ran lintian-brush on sid packages maintained in Git. This post is part of a series about the progress of the Janitor.

The bot has been submitting merge requests for about seven months now. The rollout has happened gradually across the Debian archive, and the bot is now enabled for all packages maintained on Salsa, GitLab, GitHub and Launchpad.

There are currently over 1,000 open merge requests, and close to 3,400 merge requests have been merged so far. Direct pushes are enabled for a number of large Debian teams, with about 5,000 direct pushes to date. That covers about 11,000 lintian tags of varying severities (about 75 different varieties) fixed across Debian.

For more information about the Janitor's lintian-fixes efforts, see the landing page.

apt install curl certbot
/usr/bin/letsencrypt certonly --standalone -d j.example.com,auth.j.example.com -m you@example.com
curl https://download.jitsi.org/jitsi-key.gpg.key | gpg --dearmor > /etc/apt/jitsi-keyring.gpg
echo "deb [signed-by=/etc/apt/jitsi-keyring.gpg] https://download.jitsi.org stable/" > /etc/apt/sources.list.d/jitsi-stable.list
apt-get update
apt-get -y install jitsi-meet

When apt installs jitsi-meet and its dependencies you get asked many questions for configuring things. Most of it works well. If you get the nginx certificate wrong or don't have the full chain then phone clients will abort connections for no apparent reason. It seems that you need to edit /etc/nginx/sites-enabled/j.example.com.conf to use the following ssl configuration:

ssl_certificate /etc/letsencrypt/live/j.example.com/fullchain.pem;
ssl_certificate_key /etc/letsencrypt/live/j.example.com/privkey.pem;

Then you have to edit /etc/prosody/conf.d/j.example.com.cfg.lua to use the following ssl configuration:

key = "/etc/letsencrypt/live/j.example.com/privkey.pem";
certificate = "/etc/letsencrypt/live/j.example.com/fullchain.pem";

It seems that you need to have an /etc/hosts entry with the public IP address of your server and the names j.example.com, j and auth.j.example.com. Jitsi also appears to use the names speakerstats.j.example.com, conferenceduration.j.example.com, lobby.j.example.com, conference.j.example.com and internal.auth.j.example.com, but they aren't required for a basic setup. I guess you could add them to /etc/hosts to avoid the possibility of strange errors due to it not finding an internal host name. There are optional features of Jitsi which require some of these names, but so far I've only used the basic functionality.

Access Control

This section describes how to restrict conference creation to authenticated users. The secure-domain document [2] shows how to restrict access, but I'll summarise the basics. Edit /etc/prosody/conf.avail/j.example.com.cfg.lua and use the following line in the main VirtualHost section:

authentication = "internal_hashed"

Then add the following section:

VirtualHost "guest.j.example.com"
    authentication = "anonymous"
    c2s_require_encryption = false
    modules_enabled = {
        "turncredentials";
    }

Edit /etc/jitsi/meet/j.example.com-config.js and add the following line:

anonymousdomain: 'guest.j.example.com',

Edit /etc/jitsi/jicofo/sip-communicator.properties and add the following line:

org.jitsi.jicofo.auth.URL=XMPP:j.example.com

Then run commands like the following to create new users who can create rooms:

prosodyctl register admin j.example.com

Then restart most things (Prosody at least, maybe parts of Jitsi too); I rebooted the VM. Now only the accounts you created on the Prosody server will be able to create new meetings. You should be able to add, delete, and change passwords for users via prosodyctl while it's running once you have set this up.

Conclusion

Once I gave up on the idea of running Jitsi on the same server as anything else it wasn't particularly difficult to set up. Some bits were a little fiddly and hopefully this post will be a useful resource for people who have trouble understanding the documentation. Generally it's not difficult to install if it is the only thing running on a VM.
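Two of the fiddly points in this setup, the full certificate chain for nginx and the /etc/hosts entry, can be checked from the shell before blaming Jitsi. A hypothetical sketch; the function names count_certs and has_hosts_entry are invented here, and the paths are only the example names used in this post:

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight checks for the certificate chain and /etc/hosts.

# count_certs FILE: print the number of PEM certificates in FILE. A
# fullchain.pem should hold the server certificate plus at least one
# intermediate, i.e. 2 or more.
count_certs() {
    grep -c -- '-----BEGIN CERTIFICATE-----' "$1"
}

# has_hosts_entry HOSTS_FILE NAME...: succeed only if every NAME appears as a
# host name (any field after the address) on a non-comment line.
has_hosts_entry() {
    local file="$1"; shift
    local name
    for name in "$@"; do
        awk -v n="$name" '
            { sub(/#.*/, "") }                                  # drop comments
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file" || return 1
    done
}
```

For example, count_certs /etc/letsencrypt/live/j.example.com/fullchain.pem should print 2 or more if the intermediate certificate is included, and has_hosts_entry /etc/hosts j.example.com j auth.j.example.com should exit with status 0 once the entry described above is in place.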
